Manifest

Part 0 - Introduction

Part 1 - Failing Fast (Building with prompts)

Part 2 - MVP and Deployments *You are Here 💁♀️

Part 3 - MVPolish

Part 4 - So what.

re: Cap

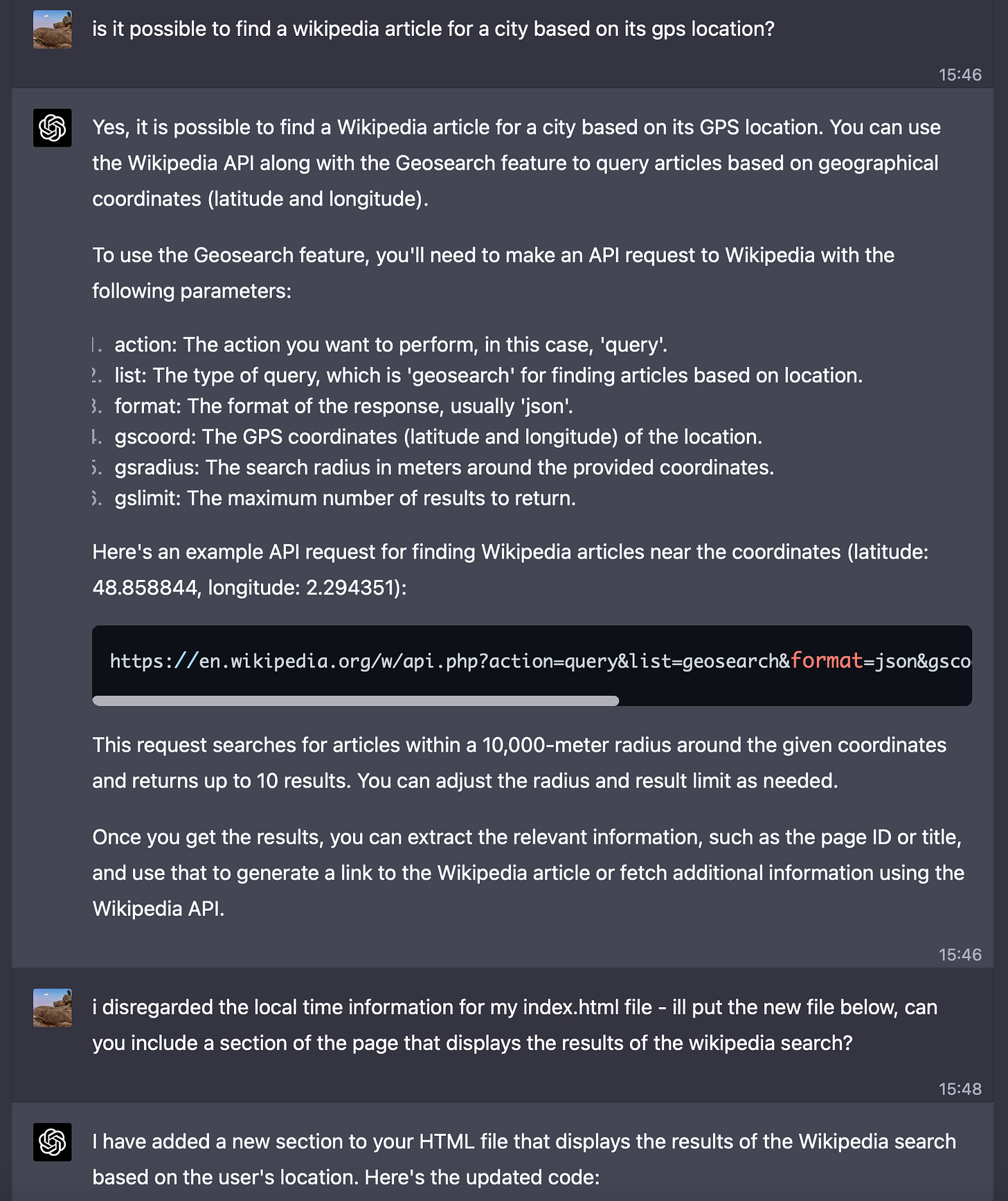

We just got the backend Lambda function to return a valid JSON object and now it’s time to build out an MVP. We’re only using GPT-4 to write code and it’s taking way more questions than it really should to build what we need.

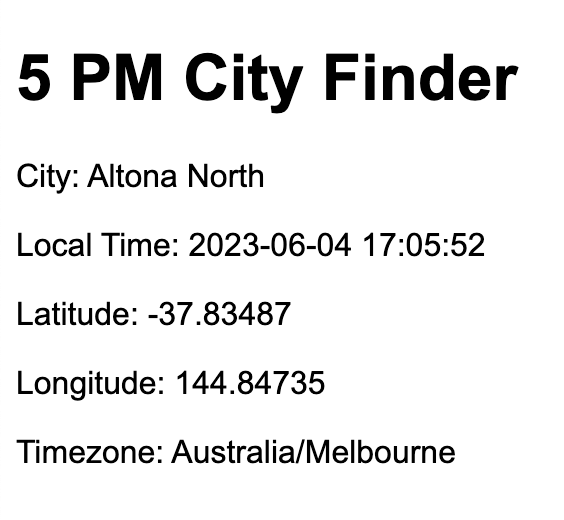

Here’s what we have so far:

Here’s where we’re going:

I’m calling this practice LLM-Driven-Development. It's not exactly a virgin birth new kind of an idea, but I'm pushing it to the brink, to the edge of the precipice where people with too much time on their hands the daring, and reckless tread.

When used to accelerate your own efforts, LLM’s in a development setting work very well! Take a gander at CodeWhisperer. Its been forged in the fires of this very crucible, purpose-built for this kind of heavy lifting.

But let's not get ahead of ourselves. As of the current date, mid-2023, with the state of LLMs as they stand, it's a bit of a stretch, a leap of faith, to anticipate an entire project to spontaneously spring forth from the ether.

It's like expecting a perfect Coq au Vin to materialize out of thin air - you need the right ingredients, the right chef, and the right amount of time.

So, let's roll up our sleeves, get our hands prompts dirty, and see what we can cook up with LLM-Driven-Development.

Frontend

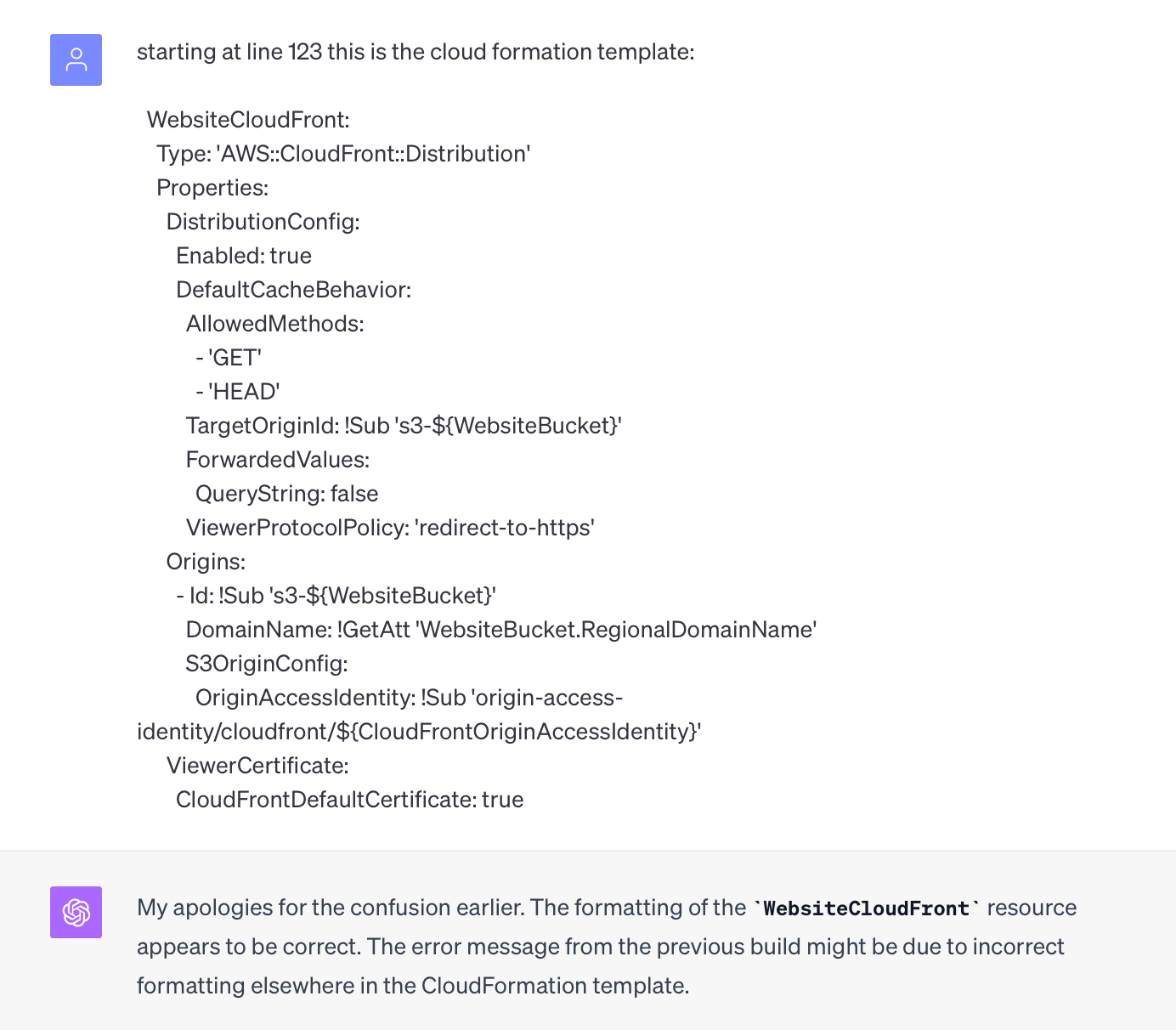

Now let’s try making an MVP frontend served by API-Gateway. API Gateway is a fully managed service that makes it easy for developers to create, publish, maintain, monitor, and secure APIs at any scale, allowing communication between an application and AWS services using HTTP, REST, and WebSocket protocols.

GPT-4 hands me a lengthy guide for setting up an API-Gateway via the AWS console. Until now, Infrastructure as Code (IaC) hasn't been employed, and truth be told, we've already rolled past the second third weekend.

As of this writing, GPT-4 has a limit of 25 messages every three hours, and I'm not going to resort to GPT-3.5. (Hi this is Will, from the future, I started writing this back in late March of 2023. That limit is gone now and also Code Interpreter Exists; additionally, I’ve moved on to using AWS Foundational Models, CodeWhisperer, and Bedrock for the majority of my LLM Driven Development exploration)

I'm sure I'd face a chorus of remarks about the superiority of GPT-4 over 3.5 and how “this isn’t a test of the latest version” and blah.. blah.. blah so I waited the 3 hours to get 25 more questions out so I can keep you happy.

I asked 443 questions in this thread alone, which at 25 questions per 3 hours is (443/25*3)~=53hrs - I spent a lot of time waiting.

In the mix of tasks, I've also requested GPT-4 to create a basic index.html file for backend testing purposes. After wrestling with the 'float vs decimal' conundrum again for a good ten messages, we've finally arrived at our first version of the index.html. Let's take a look at our initial test page.

You can try this page out yourself here:

https://findyourfivepm.com/index-original.html

I’ve kept the future API backwards compatible.

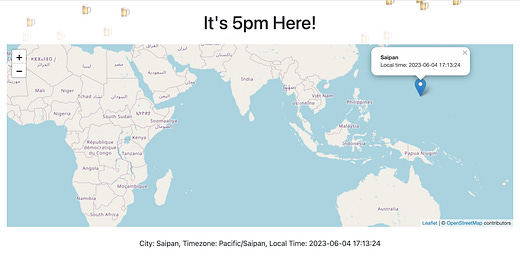

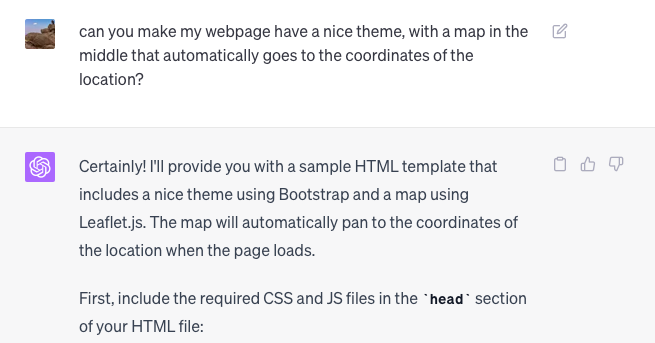

Feeling bold, I decide to spice things up. I request the integration of a theme and a map in the page that navigates to the specified coordinates. Immediately, GPT-4 responds with a barrage of CSS and JavaScript.

Feeling confident, I ask GPT-4 to add a unique background effect, making it look like it's raining 🍺 emojis.

Remarkable.

This page is also still online here: https://findyourfivepm.com/index-old.html

Two consecutive commands, and the execution is spot on. Surprisingly, not only has GPT-4 implemented OpenStreetMaps with Leaflet aptly without any explicit instruction to use those tools, but the page also presents itself rather nicely. I’ve officially reached the 'proof of concept' or MVP stage. Honestly, I'm rather impressed.

It's been two three weekends of collaborative coding with GPT-4, and we've already barely, just barely, crafted a compelling webpage.

Our Lambda is still pretty, pretty slow. Let’s see how Chat GPT can improve it

Improving the Lambda

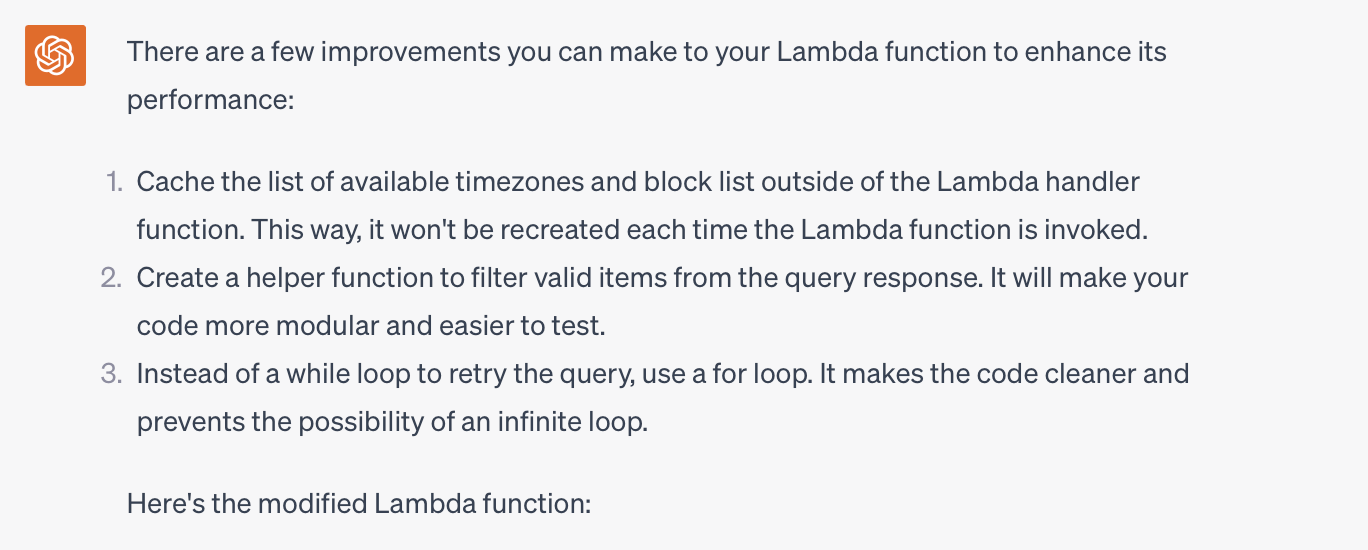

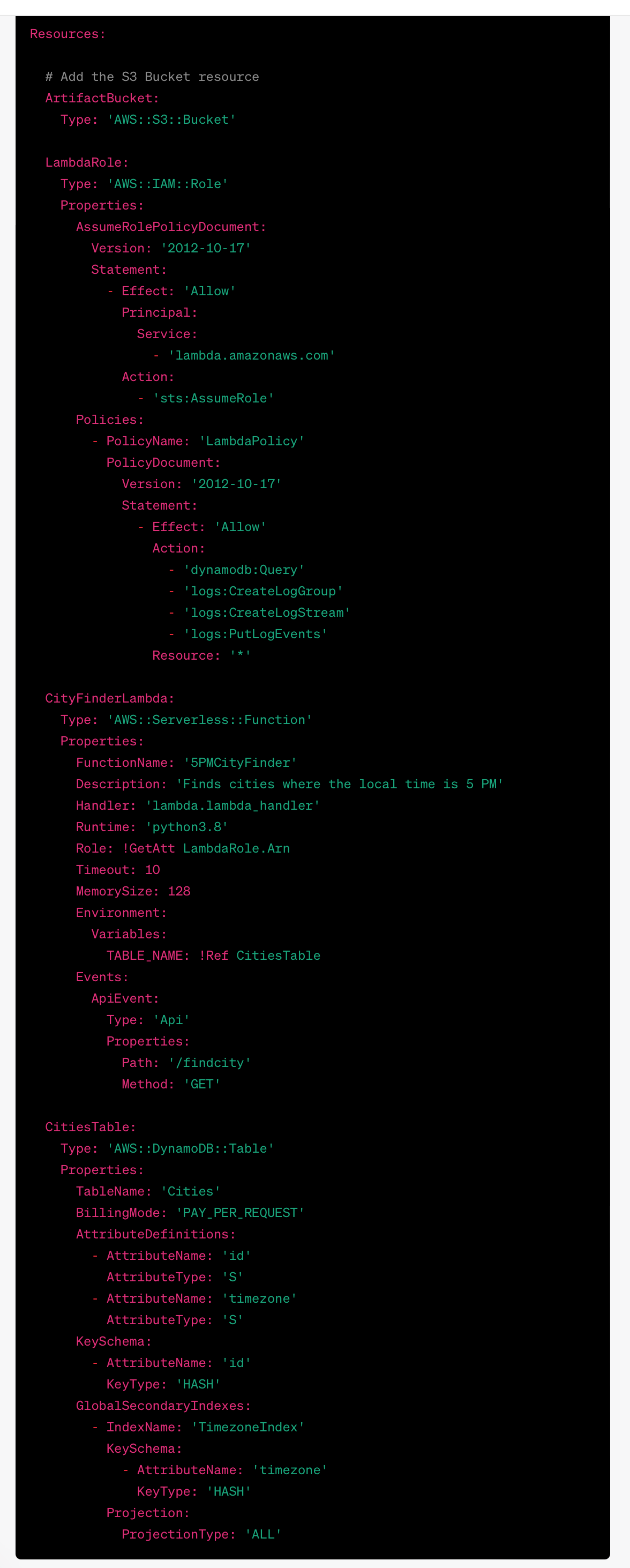

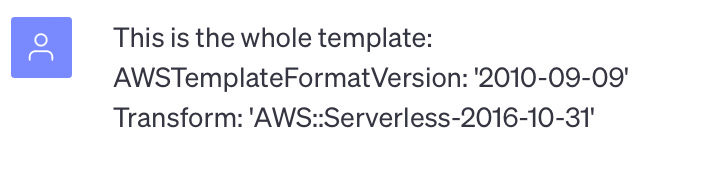

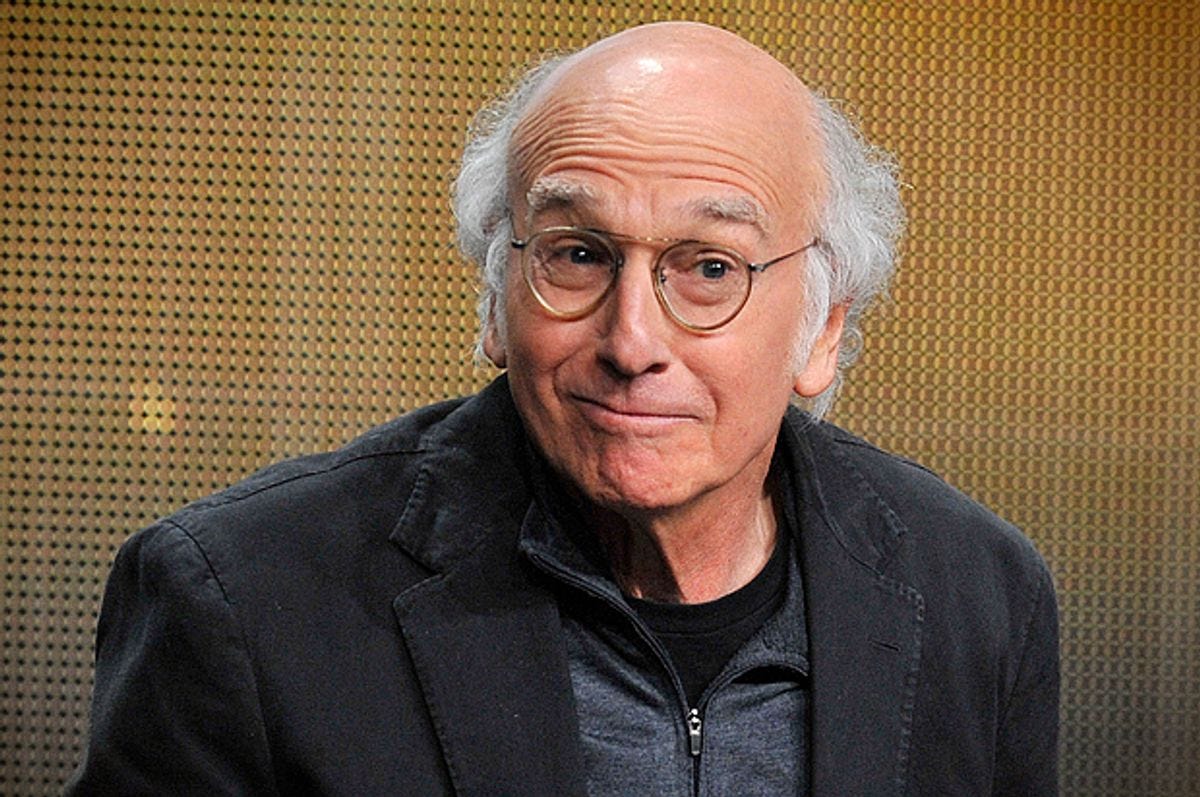

Don’t worry too much about the first line “I don’t think I want to do that:” - I was experimenting with Step Functions and ultimately decided to keep the scope simpler. I’ve asked for performance improvements for the lambda.

You’ve probably noticed I keep pasting in the code. It’s annoying, and necessary.

I constantly refresh the context of the LLM with the content of the code we’re talking about - I also include details about how the data is structured in the DynamoDB table - this really seems to help when debugging as it is likely that the code I have provided previously is no longer fully contained in the context of my messages.

Oh yeah? How does Context Length work? Glad you asked, dear reader. Glad you asked.

Imagine that you and your friend go to dinner. You start the meal talking about one thing and then as the meal progresses, so does your conversation. You discuss many different topics and without a defined agenda you both end up just talking about what was on your mind. By the end of the dinner you probably can’t remember verbatim what was said but you can remember how you felt, many important selective details, and a broad idea of how the conversation went. Unless you’re Mike Ross then context length functions very similarly to how humans do.

What is Context Length?

In simple terms, context length refers to the amount of recent information a language model can “remember” or “consider” during its current operation. This information could be a couple of sentences, a full paragraph, or even more.

The longer the chatbot's memory, the better it can understand and respond to multi-sentence queries, ensuring more satisfying user interactions.

Introducing Langchain

As we rely more on chatbots and LLMs, ensuring they have a continuous understanding of the conversation becomes crucial. Enter 'langchain' - a conceptual tool that chains the dialogue, making sure the model remains updated with the ongoing context.

Langchain ensures that even when a conversation spans over multiple turns, the chatbot can retain the context. It's like giving the chatbot a tool to revisit past conversations and stay updated, allowing it to provide answers that are not just relevant to the last sentence but to the entire conversation.

Tips for Keeping the Chatbot Current with Context

Be Explicit: While LLMs are designed to understand context, sometimes being more explicit in your statements can help.

Chunk Information: If you're providing a lot of details, try breaking it into smaller, related chunks. This way, you allow the chatbot to process each bit of information more efficiently.

Recap Occasionally: Just like in a human conversation, recapping can be beneficial.

Now Back to the Lambda.

The very first item in the list is really the only one that is going to help us with performance. We should move things outside the lambda handler if possible. This won’t help with cold starts, but it will help with each subsequent invocation. For instance, if you’re using an SDK that needs to be initialized just once, like a database connection, or need to load some resources like machine learning models, keeping this kind of code outside the handler improves performance.

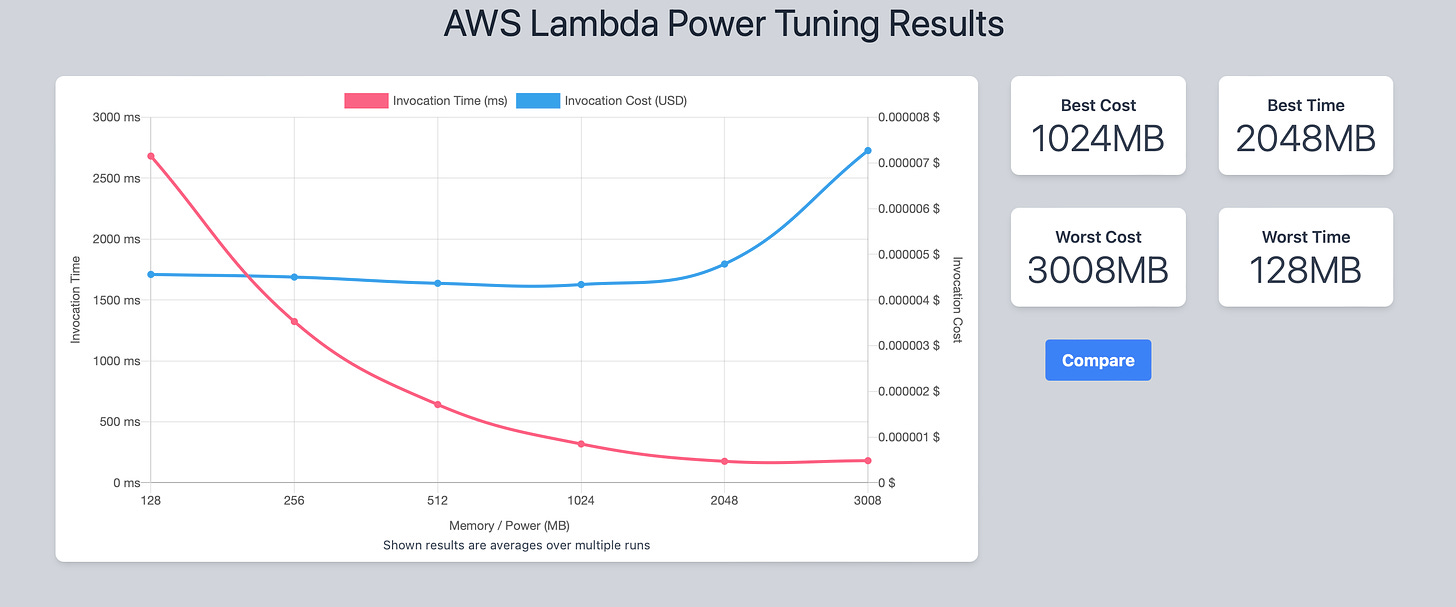

The real laziest performance gain comes from Lambda Power Tuning.

AWS Lambda Power Tuning is an optimization tool for balancing the speed and cost of Lambda functions by automating memory allocation tests. It employs AWS Step Functions to run multiple function versions concurrently at different memory allocations, measuring performance through live HTTP calls and SDK interactions. Graphing the results helps visualize performance and cost trade-offs, aiding in better configuration decisions.

This is because Lambda's resources (CPU, memory, disk, and network) are allocated in proportion to each other. Therefore, by increasing the memory size, you not only get more memory but also a proportional increase in CPU power, which can lead to faster execution times for CPU-bound tasks. I highly recommend using Lambda Power Tuning on all your lambda functions especially after a big update.

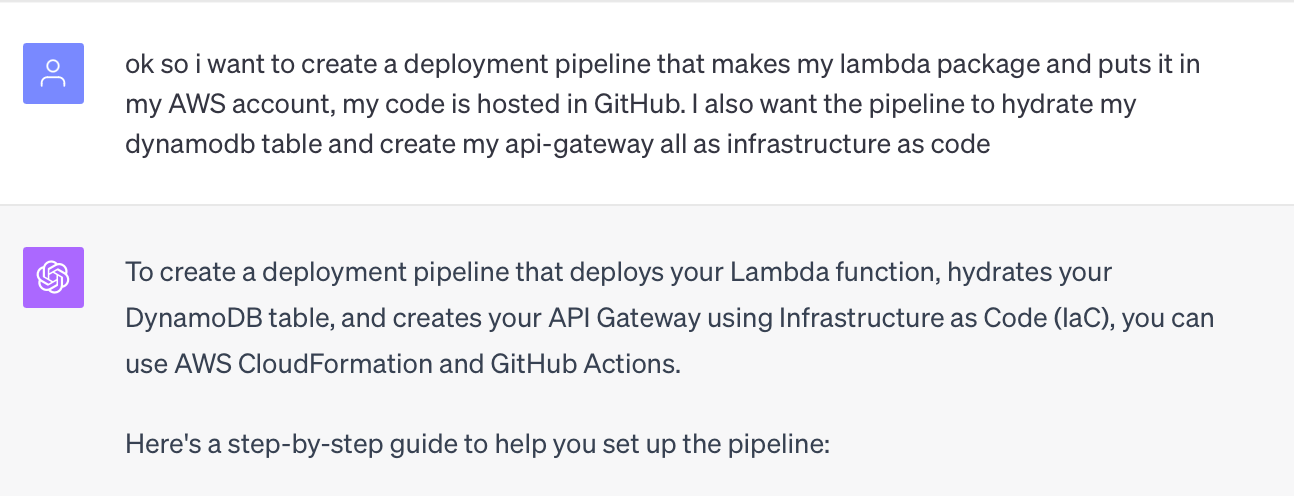

Now that our main Lambda function is working well; lets move on to IaC

IaC - CloudFormation

Well, I’ve now asked my inaugural IaC question. Remember when I talked to you about refreshing the context to ensure maximum results? The resulting CloudFormation template thinks the lambda is written in Node and forgot that way earlier in the project, literally 3-4 weeks ago, I suggested we use AWS CodeBuild and CodePipeline. We’re now using GitHub Actions. I spend a few messages refreshing ChatGPT and telling it that we’re using Python (and pasting in the relevant code) and that I’d like to use CodeBuild and CodePipeline with my CloudFormation template.

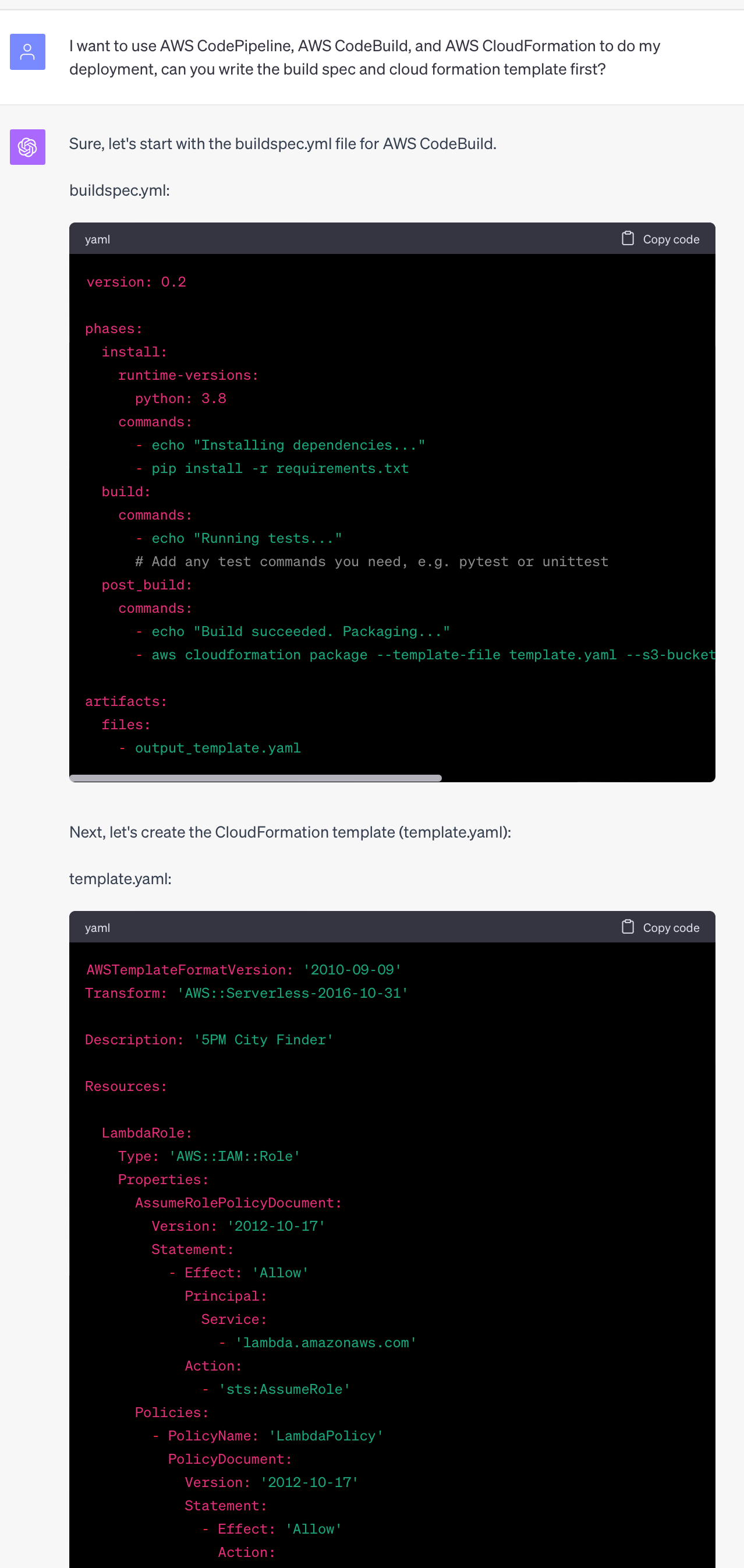

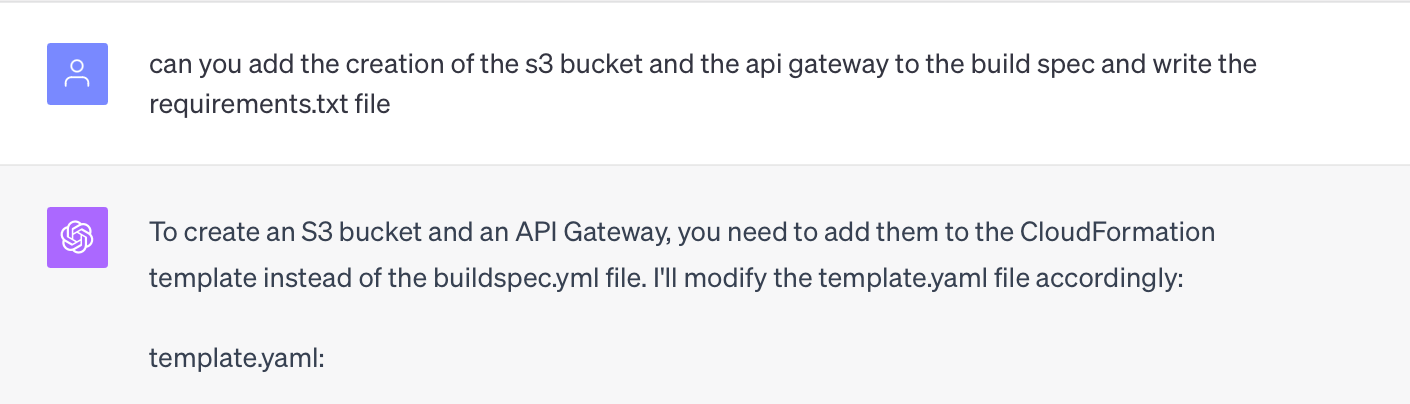

OK. That got us somewhere, the first CloudFormation template now has:

LambdaRole for IAM permissions

CityFinderLambda - the actual Lambda function

CitiesTable - in DynamoDB

I also have a basic buildspec.yaml that will install our requirements for the lambda and deploy the CloudFormation template.

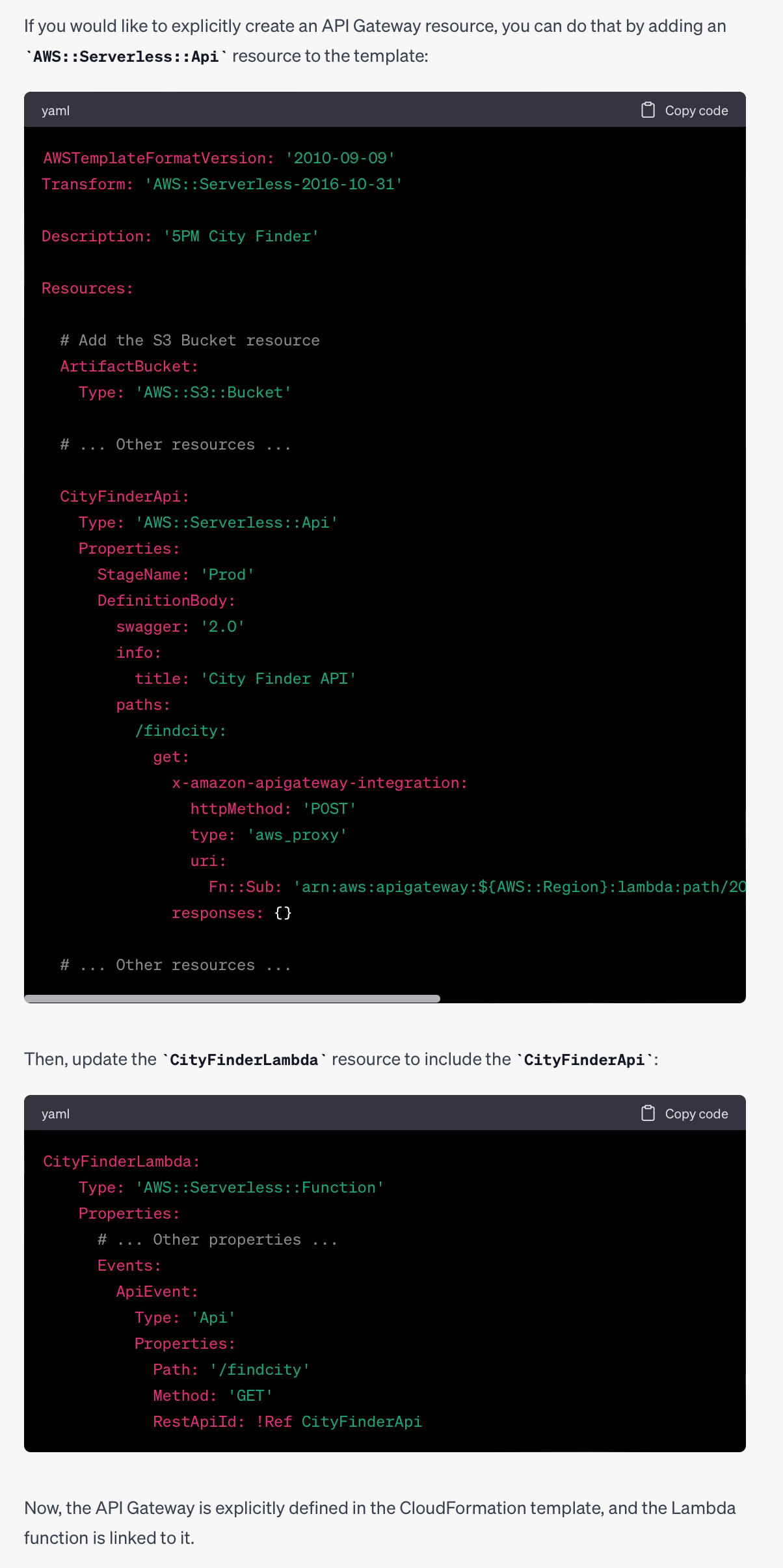

I ask to add the S3 Bucket for packaged CloudFormation templates and the API Gateway.

This does not add the requested API-Gateway explicitly. Instead it defines the API-Gateway as part of the AWS::Serverless::Function resource within the Events section. This is perfectly valid, but does not do what I want for the future maintainability of this project, as I want the flexibility to configure the API-Gateway in other ways. A simple follow up message and this is cleared up.

I spend the next 2 weeks here.

I failed flailed around with a stupid idea. In my index.html I have a section that looks like this

<script>

function createRain() {

const numberOfDrops = 100;

const rainContainer = document.querySelector(".rain");

for (let i = 0; i < numberOfDrops; i++) {

const drop = document.createElement("span");

drop.innerHTML = "🍺";

drop.classList.add("drop");

drop.style.left = Math.random() * 100 + "vw";

drop.style.animationDuration = Math.random() * 6 + 4 + "s";

drop.style.animationDelay = Math.random() * 2 + "s";

rainContainer.appendChild(drop);

}

}

document.addEventListener("DOMContentLoaded", function () {

const apiUrl = "https://abcde12345.execute-api.ap-southeast-2.amazonaws.com/prod/find_location";

createRain();

// Initialize the map

const map = L.map("map").setView([0, 0], 5);

L.tileLayer("https://{s}.tile.openstreetmap.org/{z}/{x}/{y}.png", {

attribution: '© <a href="https://www.openstreetmap.org/copyright">OpenStreetMap</a> contributors',

}).addTo(map);

// Fetch city information and update the map and text

fetch(apiUrl)

.then((response) => response.json())

.then((data) => {

const { city, local_time, latitude, longitude, timezone } = data;

const initialZoomLevel = 19;

const finalZoomLevel = 9;

map.setView([latitude, longitude], initialZoomLevel);

const marker = L.marker([latitude, longitude]).addTo(map);

marker.bindPopup(`<b>${city}</b><br>Local time: ${local_time}`).openPopup();

document.getElementById("city-info").innerText = `City: ${city}, Timezone: ${timezone}, Local Time: ${local_time}`;

// Smooth zoom out using flyTo method

map.flyTo([latitude, longitude], finalZoomLevel, { duration: 10 });

})

.catch((error) => {

console.error("Error fetching data:", error);

});

});

</script>The part we’re concerned with is:

document.addEventListener("DOMContentLoaded", function () {

const apiUrl = "https://abcde12345.execute-api.ap-southeast-2.amazonaws.com/prod/find_location";Since we’re deploying as IaC into a blank account, I don’t know the URL of the API-Gateway before the index.html file exists. I’m not really interested in using parameter store or doing anything correctly fancy with this part since this is likely to never change once deployed; but I do something stupid anyway. Since I have free labour with ChatGPT, I setup the audacious goal of having my CloudFormation Stack change this code (not the build spec, which would have been SOOO much easier) at deployment time. This is where I spend over a week. Completely stalled out.

ChatGPT leads me into a world many know of but few love. CloudFormation Custom Resources. More on this later? No, it’s boring. I’ll wrap it up here.

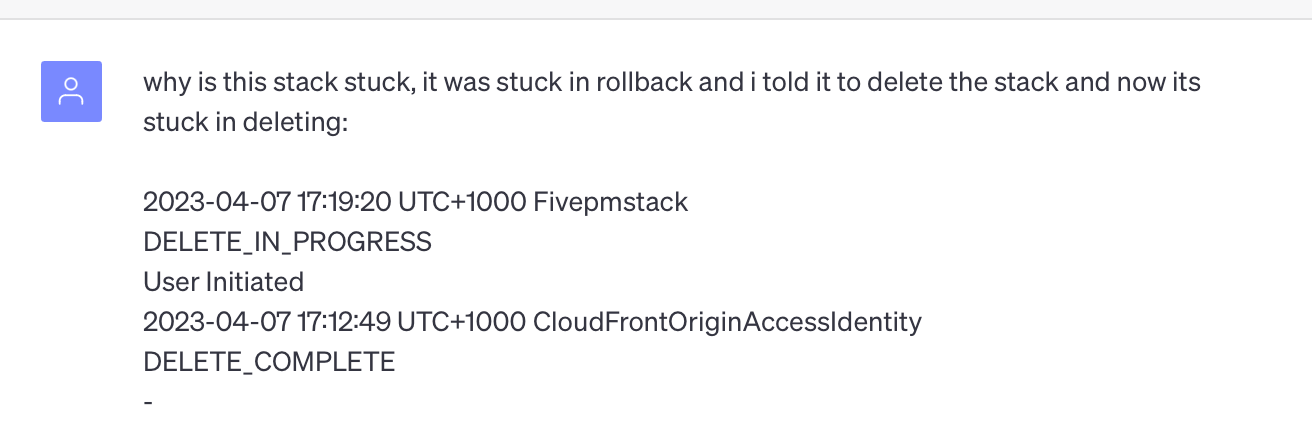

I end up getting my stack in a stuck state for multiple hours at a time. There is nothing you can do if your stack is stuck. I even ended up paying for AWS Support to tell me so.

I abandon the idea.

After I’m done with my migraine inducing journey, I keep going with actual development.

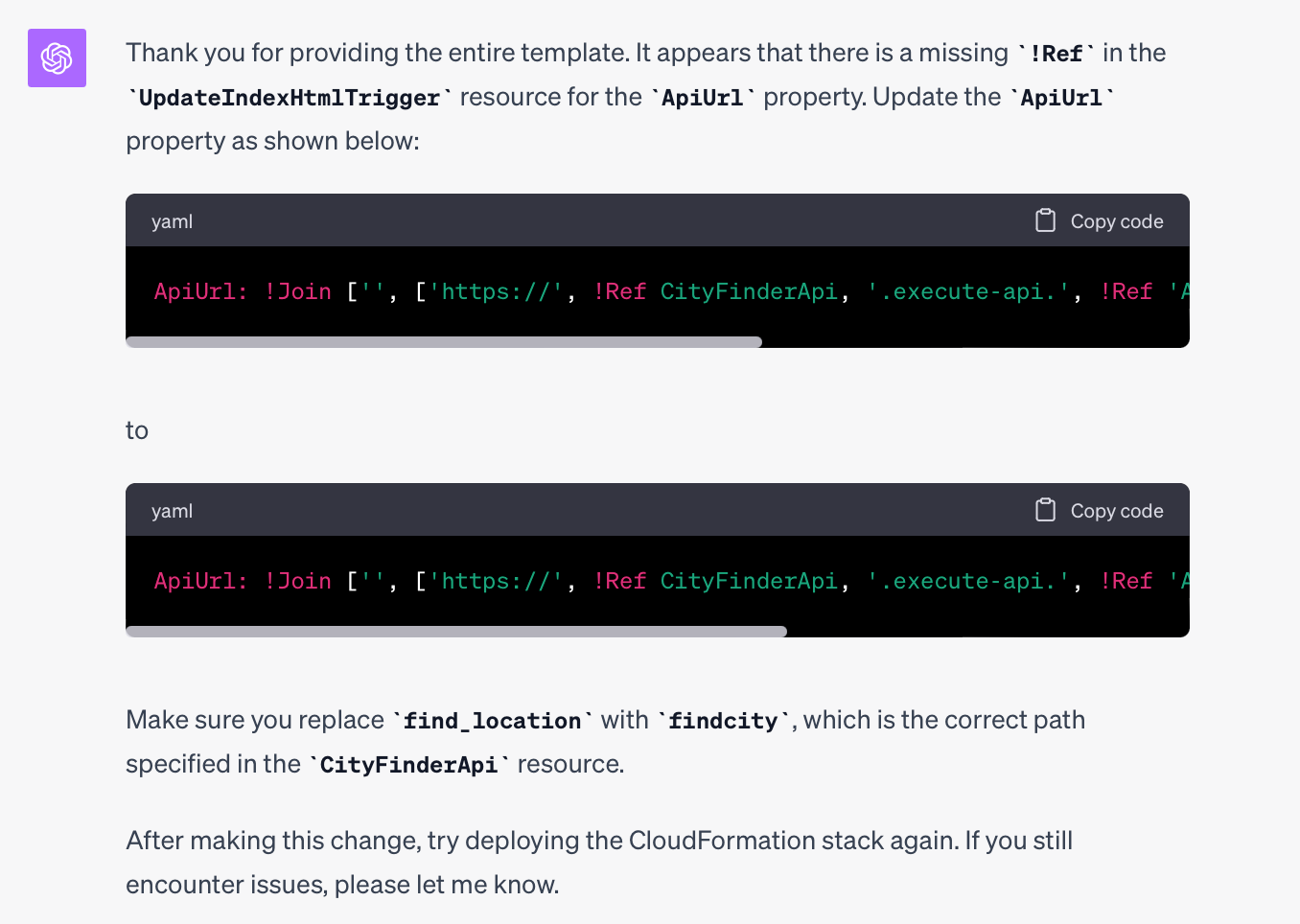

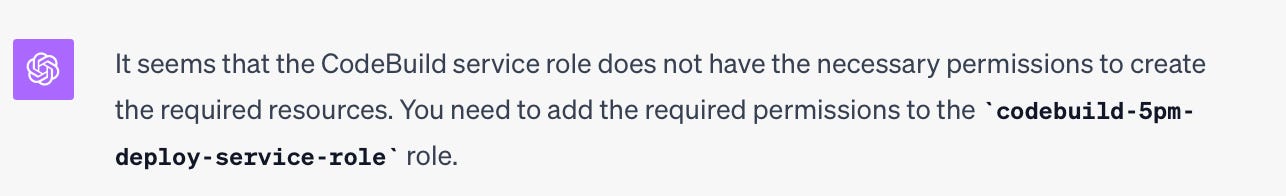

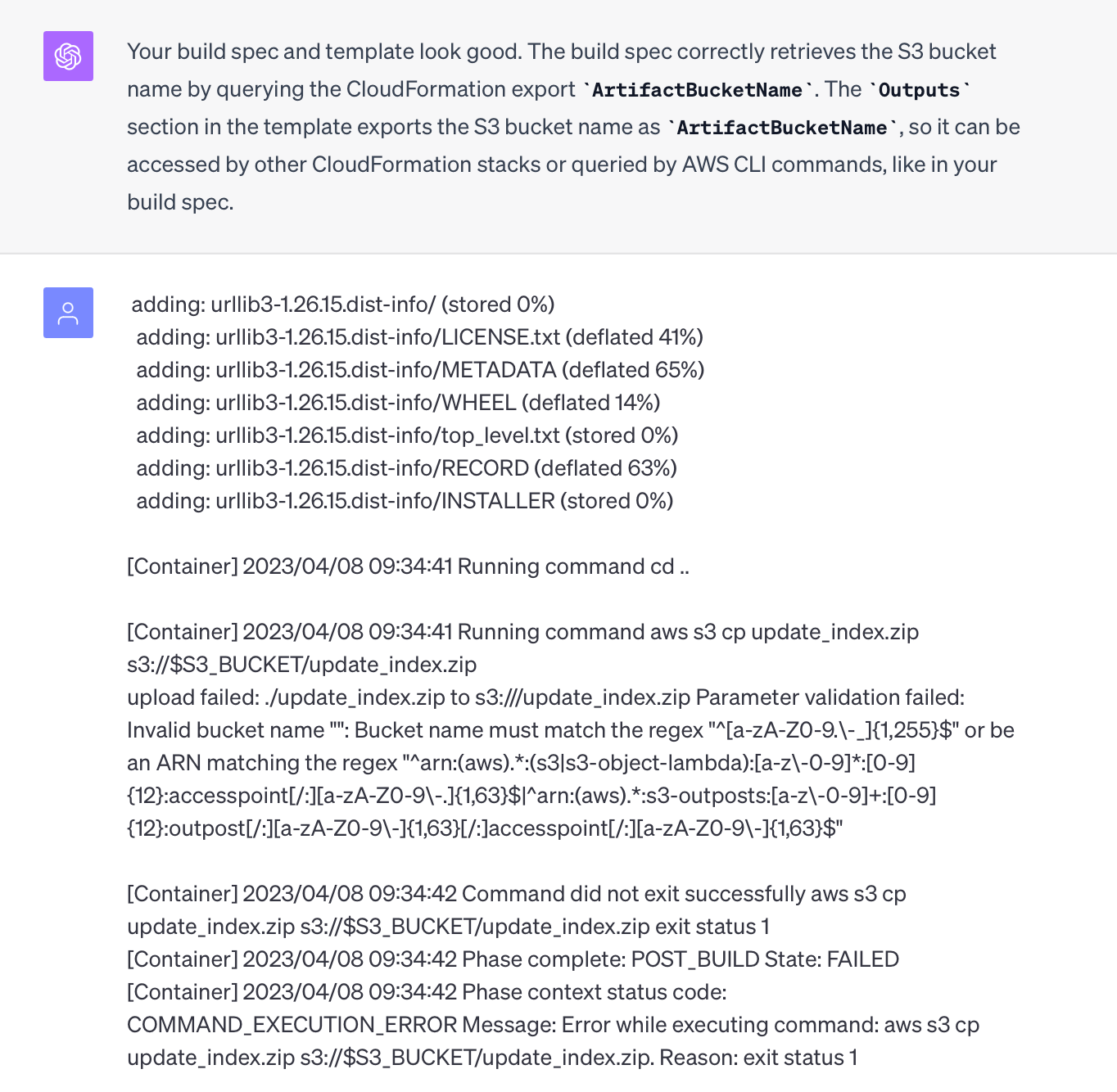

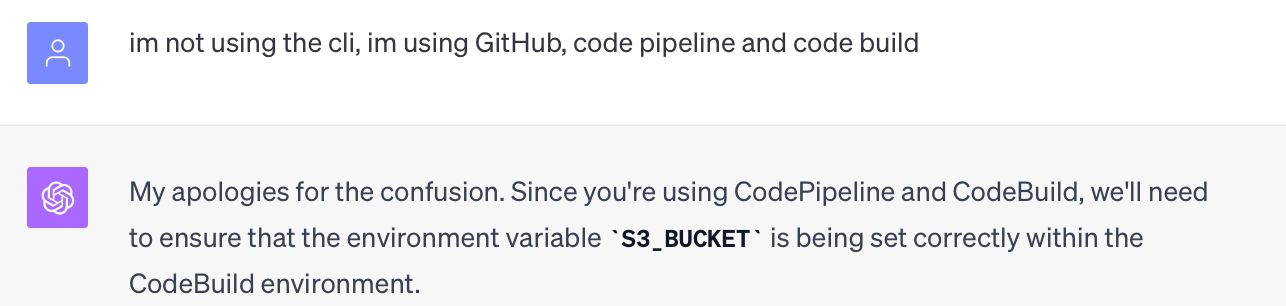

I spend the next weekend updating the CloudFormation stack. Getting CodeBuild and CodePipeline Setup and dialing in the IAM permissions via Trial and Error. I paste all deployment errors into ChatGPT and make only its suggested changes.

This is infuriating for me. I’ve been using CloudFormation for years, and I have the issue right in front of me. I know the answer and I’m powerless to do anything about it. Yet I watch as we brute force our way thru solutions that are simple changes.

Here is a montage - there are at least 100 more messages like this.

That last one was particularly frustrating! There are over 100 more messages involved in getting the IaC setup properly. This took me over 2 weeks.

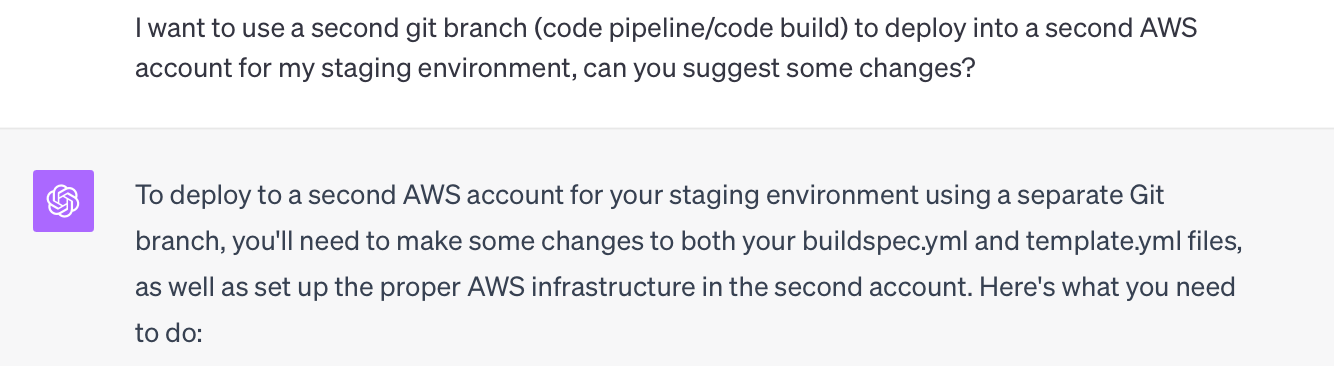

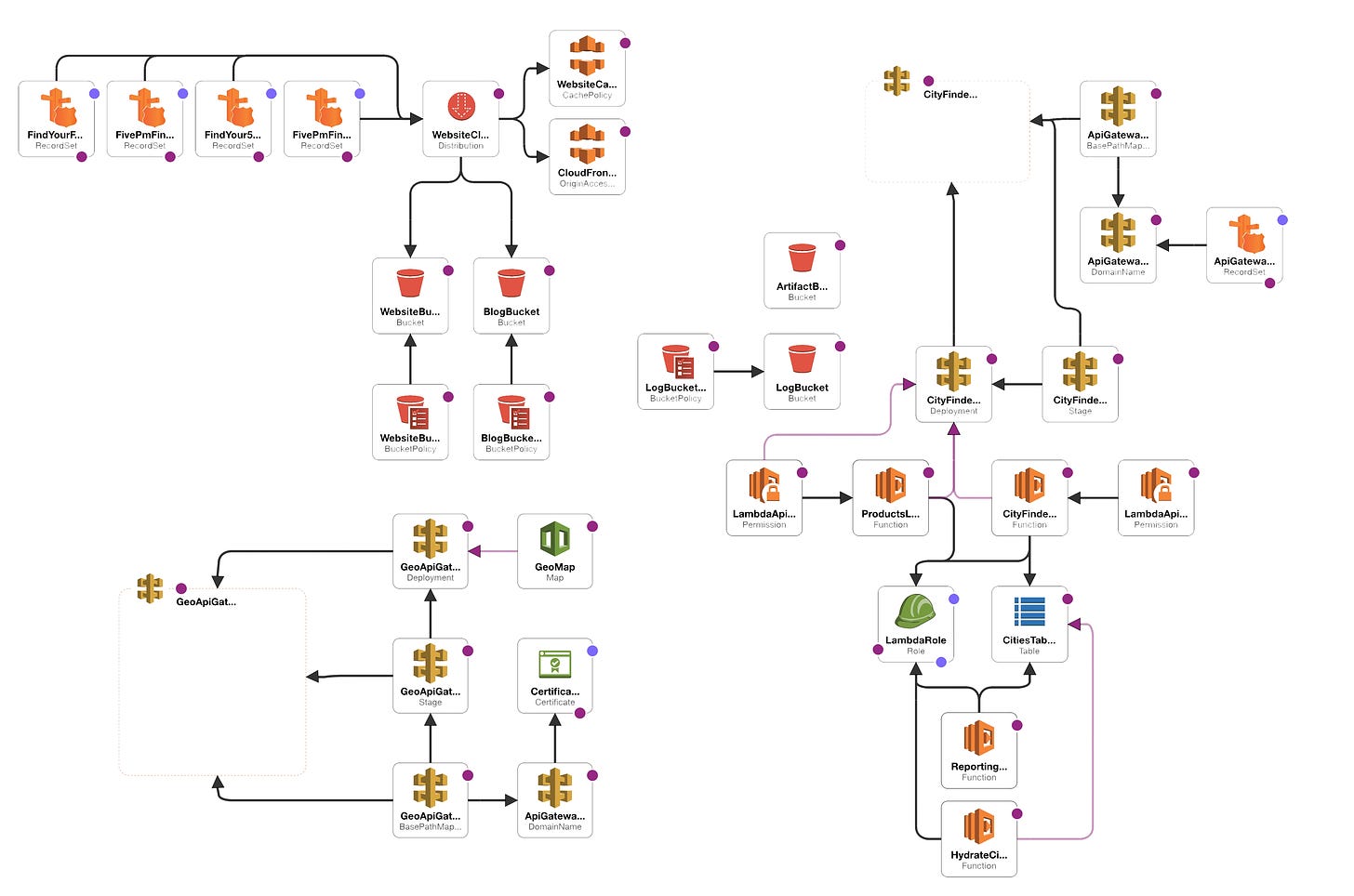

With a completed CloudFormation Stack deployed into the main account I also get to work at setting up a second account for my Staging environment.

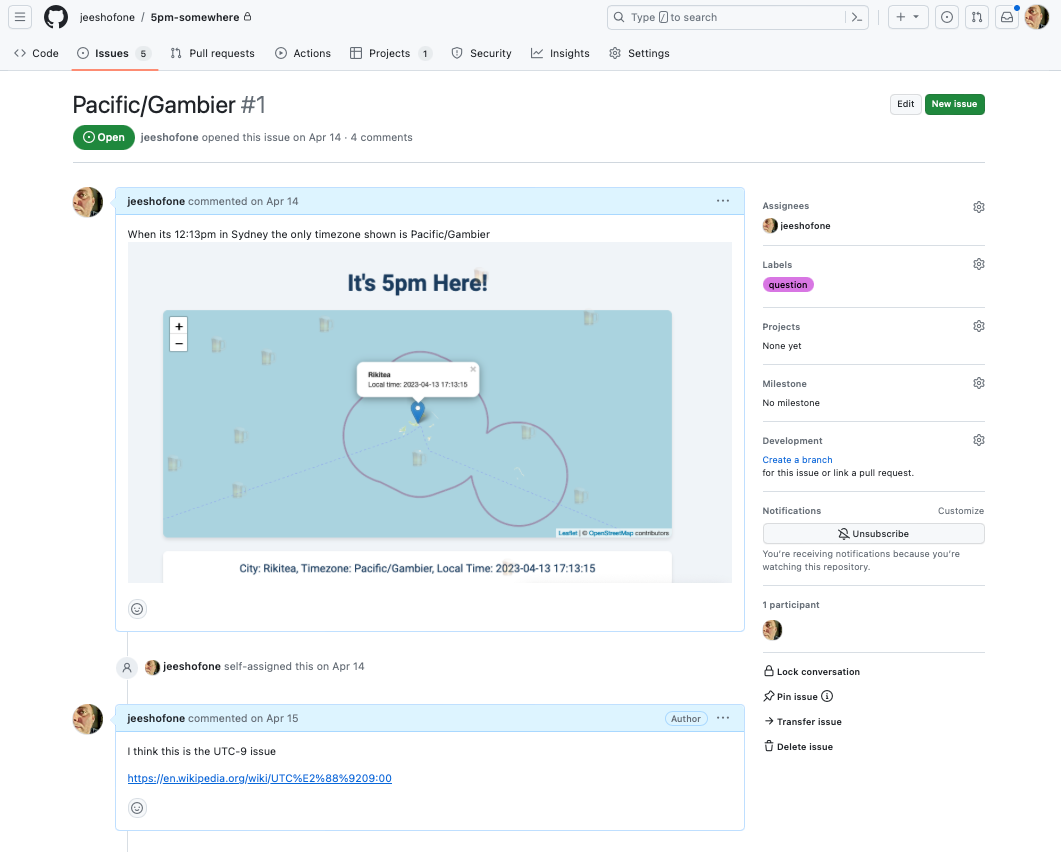

ChatGPT makes a few minor changes to my page and we’re now looking like we have an MVP. The biggest change is a custom javascript function that can search poorly Wikipedia for the most relevant article of the current location. (more on this later)

We might as well teach our viewer something while they look for an excuse to drink a beer. Surprisingly this only takes 2 12 ask’s for ChatGPT to get it right.

You can still view this version of the page here: https://findyourfivepm.com/index-old.html

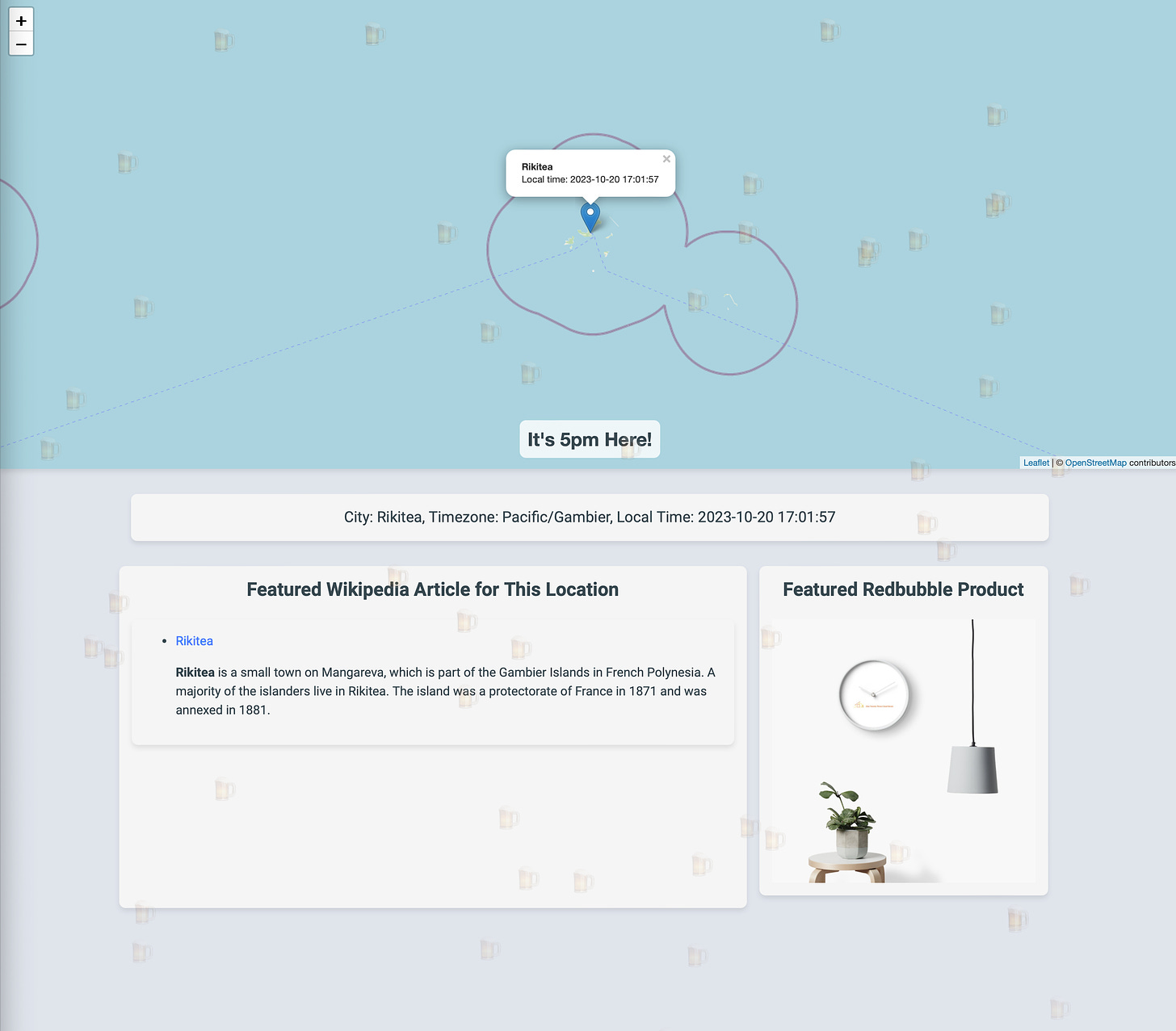

Fun fact - Rikitea is the subject of my first GitHub Issue in this project. During sometimes of the year, it’s actually the only place in the world with a population over 500 observing 5pm! I thought this was a bug, or shitty data, but it’s actually a real phenomenon.

They have the world’s loneliest time zone at UTC-9. You can read more about that here: https://findyourfivepm.com/blog/rikitea-the-sometimes-exclusive-celebrant-of-five-pm-at-utc-9.html

I should probably close my GitHub Issue…

Current Status

I have now created:

A full CloudFormation Stack deploying automatically in 2 AWS accounts, Production and Staging

CityFinder Lambda Serving the API Request to get the Current City at 5pm

A DynamoDB table with over 198,000 cities and their locations

A Hydrate Lambda that gets data from http://www.geonames.org and hydrates the DynamoDB table

An API-Gatway to interface the CityFinder Lambda.

A Basic HTML front end that allows me to visualize the results.

All relevant IAM Roles and Permissions

Custom Domain Names for the service with CloudFront serving SSL certificates and API-Gateway serving Cities and in the production environment using CORS and SSL.

It's now time to polish this up a bit. I am using the “free” version of OpenStreet Maps. I can’t use this in production at scale, it’s against the terms of service and my users if I ever get any will get rate-limited. I also found that after spending a lot of time working with ChatGPT that it failed to get any better at web page creation. Organizing visual elements and making something appealing for a user to look at is well beyond its current capabilities.

It’s time to take our MVP to the next level.

See you in Part 3.

Manifest

Part 0 - Introduction

Part 1 - Failing Fast (Building with prompts)

Part 2 - MVP and Deployments *You are Here 💁♀️

Part 3 - MVPolish

Part 4 - So what.

I’m sure you want to see each and every prompt; but there are hundreds if not thousands of prompts and I couldn’t possibly paste them here…. oh, ChatGPT has link sharing - as a special offer for paid subscribers, you can access the enthralling conversations below.

Keep reading with a 7-day free trial

Subscribe to One Twenty Three Cloud Street to keep reading this post and get 7 days of free access to the full post archives.

![To create an API Gateway in front of your Lambda function, follow these steps: Sign in to the AWS Management Console and open the Amazon API Gateway console. Choose Create API. Select REST API and click Build. Choose New API and provide a name and description for your API. Then click Create API. In the Actions menu, choose Create Resource. Provide a resource name and a path for the resource, and click Create Resource. With the new resource selected, choose Create Method from the Actions menu, and select GET. In the GET method configuration, choose Lambda Function for the Integration type, select the Use Lambda Proxy integration checkbox, and select the region where your Lambda function is deployed. Start typing the name of your Lambda function in the Lambda Function field, and select it from the dropdown list. Click Save and confirm the permission setting to allow API Gateway to invoke your Lambda function. In the Actions menu, choose Deploy API. Choose [New Stage] for the Deployment stage and provide a name for the new stage (e.g., "prod"). Click Deploy. After the deployment is complete, you will see the Invoke URL at the top of the stage editor. This is the base URL for your API. Now, you can use the Invoke URL to call your API Gateway from a static webpage. To do this, you can use JavaScript to make an AJAX request and display the information on the page. Here's a sample HTML template to get you started: To create an API Gateway in front of your Lambda function, follow these steps: Sign in to the AWS Management Console and open the Amazon API Gateway console. Choose Create API. Select REST API and click Build. Choose New API and provide a name and description for your API. Then click Create API. In the Actions menu, choose Create Resource. Provide a resource name and a path for the resource, and click Create Resource. With the new resource selected, choose Create Method from the Actions menu, and select GET. In the GET method configuration, choose Lambda Function for the Integration type, select the Use Lambda Proxy integration checkbox, and select the region where your Lambda function is deployed. Start typing the name of your Lambda function in the Lambda Function field, and select it from the dropdown list. Click Save and confirm the permission setting to allow API Gateway to invoke your Lambda function. In the Actions menu, choose Deploy API. Choose [New Stage] for the Deployment stage and provide a name for the new stage (e.g., "prod"). Click Deploy. After the deployment is complete, you will see the Invoke URL at the top of the stage editor. This is the base URL for your API. Now, you can use the Invoke URL to call your API Gateway from a static webpage. To do this, you can use JavaScript to make an AJAX request and display the information on the page. Here's a sample HTML template to get you started:](https://substackcdn.com/image/fetch/w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F38ed2fe3-40e3-44b3-8dd8-bd296cf3d3ff_1390x1162.png)

![I don't think I want to do that: can you suggest ways to improve the performance of the lambda: import boto3 import random from botocore.exceptions import ClientError from datetime import datetime import pytz import os import json # this is how we hydrated the table """ def hydrate_data(): try: with open('cities.json', 'r') as file: cities = json.load(file) for city in cities: city['latitude'] = Decimal(str(city['latitude'])) city['longitude'] = Decimal(str(city['longitude'])) city['id'] = f"{city['city']}_{city['timezone']}" # Update this line to use 'city' instead of 'name' table = dynamodb.Table('Cities') table.put_item(Item=city) print("Data hydrated successfully.") except Exception as e: print(f"Error hydrating data: {e}") """ # sample json # {"city": "les Escaldes", "timezone": "Europe/Andorra", "latitude": 42.50729, "longitude": 1.53414}, {"city": "la Massana", "timezone": "Europe/Andorra", "latitude": 42.54499, "longitude": 1.51483} ######## dynamodb = boto3.resource('dynamodb') table_name = os.environ['TABLE_NAME'] table = dynamodb.Table(table_name) I don't think I want to do that: can you suggest ways to improve the performance of the lambda: import boto3 import random from botocore.exceptions import ClientError from datetime import datetime import pytz import os import json # this is how we hydrated the table """ def hydrate_data(): try: with open('cities.json', 'r') as file: cities = json.load(file) for city in cities: city['latitude'] = Decimal(str(city['latitude'])) city['longitude'] = Decimal(str(city['longitude'])) city['id'] = f"{city['city']}_{city['timezone']}" # Update this line to use 'city' instead of 'name' table = dynamodb.Table('Cities') table.put_item(Item=city) print("Data hydrated successfully.") except Exception as e: print(f"Error hydrating data: {e}") """ # sample json # {"city": "les Escaldes", "timezone": "Europe/Andorra", "latitude": 42.50729, "longitude": 1.53414}, {"city": "la Massana", "timezone": "Europe/Andorra", "latitude": 42.54499, "longitude": 1.51483} ######## dynamodb = boto3.resource('dynamodb') table_name = os.environ['TABLE_NAME'] table = dynamodb.Table(table_name)](https://substackcdn.com/image/fetch/w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Fb130121e-5d57-4d70-bc76-05aad48b5f6c_1390x1474.png)